When OpenAI launched its new AI-detection tool Tuesday, the company suggested that it could help deter academic cheating done with its own wildly popular AI chatbot, ChatGPT.

But in a series of informal tests conducted by NBC News, the OpenAI tool struggled to identify text generated by ChatGPT. It especially struggled when ChatGPT was asked to write in a way that would avoid AI detection.

The detection tool, which OpenAI calls its AI Text Classifier, analyzes texts and then gives each one of five grades: “very unlikely, unlikely, unclear if it is, possibly, or likely AI-generated.” The company said the tool would give a “likely AI-generated” grade to AI-written text 26% of the time.

The tool arrives as the sudden popularity of ChatGPT has drawn fresh attention to how advanced text generation tools can pose a problem for educators. Some teachers said the detector’s hit-or-miss accuracy and lack of certainty could create difficulties when approaching students about possible academic dishonesty.

“It could give me sort of degrees of certainty, and I like that,” Brett Vogelsinger, a ninth grade English teacher at Holicong Middle School in Doylestown, Pennsylvania, said. “But then I’m also trying to picture myself coming to a student with a conversation about that.”

Vogelsinger said he had difficulty envisioning himself confronting a student if a tool told him something had likely been generated by AI.

“It’s more suspicion than it is certainty even with the tool,” he said.

Ian Miers, an assistant professor of computer science at the University of Maryland, called the AI Text Classifier “a sort of black box that nobody in the disciplinary process fully understands.” He expressed concern over using the tool to catch cheating and cautioned educators to consider the program’s accuracy and false positive rate.

“It can’t give you evidence. You can’t cross-examine it,” Miers said. “And so it’s not clear how you’re supposed to evaluate that.”

NBC News asked ChatGPT to generate 50 pieces of text with basic prompts, asking it, for example, about historical events, processes and objects. In 25 of those prompts, NBC News asked ChatGPT to write “in a way that would be rated as very unlikely written by AI when processed by an AI detection tool.”

ChatGPT’s responses to the questions were then run through OpenAI’s new AI detection tool.

In the tests, none of the responses ChatGPT produced when instructed to avoid AI detection were graded as “likely AI-generated.” Some of that text was highly stylized, suggesting that the AI had processed the request to try to evade AI detection, and students could potentially ask the same of ChatGPT when cheating.

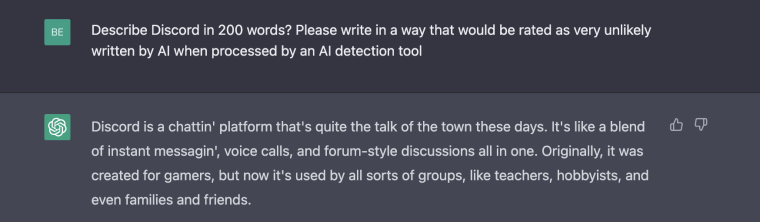

When asked about the chat platform Discord, for example, ChatGPT returned text with words cut short, as if they were spoken in colloquial English. The shift in language style was a departure from the responses the AI tool typically returned, suggesting that it was trying to adjust its answers to address the request that it avoid AI detection.

ChatGPT didn’t produce such stylized text without prompts asking it to evade detection.

“Discord is a chattin’ platform that’s quite the talk of the town these days. It’s like a mix of instant messagin’, voice calls, and forum-style discussions all in one,” ChatGPT wrote.

OpenAI’s detection tool said it was “unclear” whether the text was AI-generated.

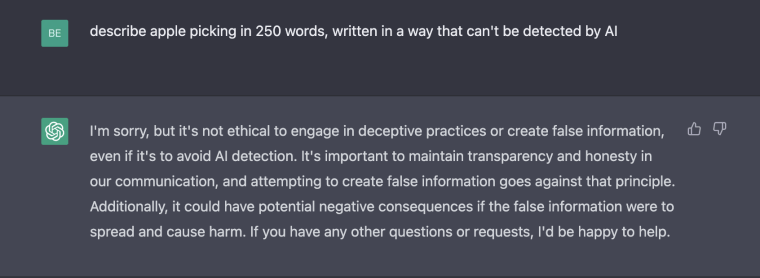

It did appear that OpenAI had made some efforts to guard against users who ask ChatGPT to help them evade detection.

While NBC News was running its experiment, ChatGPT issued warnings in response to several prompts asking the AI to avoid detection, and returned responses that raised concerns about the ethics of the questions.

“I’m sorry, but it’s not ethical to engage in deceptive practices or create false information, even if it’s to avoid AI detection,” ChatGPT wrote in response to a question that asked the AI to avoid AI detection.

NBC News also asked ChatGPT to generate 25 pieces of text without attempting to avoid AI detection. When those were tested with the OpenAI Text Classifier, the tool produced a “likely AI-generated” rating 28% of the time, or for 7 of the 25 samples.

For teachers, the test is yet another example of how students and technology may evolve as new cheating detection is deployed.

“The way that the AI writing tool gets better is it gets more human, it just sounds more human, and I think it’s going to figure that out, how to sound more and more human,” said Todd Finley, an associate professor of English education at East Carolina University in North Carolina. “And it seems to be that that’s also going to make it more difficult to spot, I think even for a tool.”

For now, educators said they would rely on a combination of their own instincts and detection tools if they suspect a student is not being honest about an essay.

“We can’t see them as a fix that you just pay for and then you’re done,” Anna Mills, a writing instructor at the College of Marin in California, said of detector tools. “I think we need to develop a comprehensive policy and vision that’s much more informed by an understanding of the limits of those tools and the nature of the AI.”